How to curb runaway power in the data center

Data centers represent a skyrocketing component of any enterprise's energy budget, and therefore a major share of operational costs. Best energy management practices can help contain these costs, and simultaneously put IT and facilities teams on an environmentally responsible path that aligns the corporate data centers with EPA energy standards.

The Scope of the Problem -- and the Opportunity

Surveys of all sizes and types of data centers identify many categories of wasted energy. For example, approximately 10 to 15 percent of all data center servers are idle (i.e., not processing useful work). An average server draws about 400 watts of power, for an annual cost of $800 or more. This adds up to billions of dollars of wasted energy, cooling, and management costs every year in the U.S. alone.
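To see how a per-server wattage turns into an annual figure, the arithmetic can be sketched as follows. The 400-watt draw comes from the text above. The electricity price and the overhead factor for cooling and power distribution are illustrative assumptions, not figures from the surveys, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def annual_energy_kwh(avg_watts: float) -> float:
    """Energy used in one year by a device drawing avg_watts continuously."""
    return avg_watts * HOURS_PER_YEAR / 1000.0


def annual_cost(avg_watts: float, price_per_kwh: float,
                overhead_factor: float = 1.0) -> float:
    """Yearly cost of the device.

    overhead_factor scales the IT load to include cooling and power
    distribution (1.0 means no overhead). Both price_per_kwh and
    overhead_factor are assumptions supplied by the caller.
    """
    return annual_energy_kwh(avg_watts) * overhead_factor * price_per_kwh


# A 400 W server running all year (wattage from the article).
print(f"{annual_energy_kwh(400):.0f} kWh per year")

# Assumed tariff of $0.10/kWh and assumed overhead factor of 2.0.
print(f"${annual_cost(400, price_per_kwh=0.10, overhead_factor=2.0):.2f} per year")
```

The result is sensitive to both assumed inputs, so the local tariff and the facility's measured overhead should be substituted before drawing conclusions.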

Traditional power management approaches have failed to curb this or other instances of wasted energy. As a result, data center managers have routinely over-budgeted power and cooling to accommodate spikes in demand and high-priority needs, and to avoid "hot spots" that would otherwise negatively impact server performance.

Finding Where the Energy is Going

The potential for savings and the high cost of energy have driven demand for new tools relating to energy management. Most of the resulting power management tools let IT managers examine the returned-air temperature at the air-conditioning units, and perhaps the power consumption for each rack in the data center. However, most lack visibility at the individual server level, and base their calculations on modeled or estimated data that can deviate from actual consumption by as much as 40 percent.

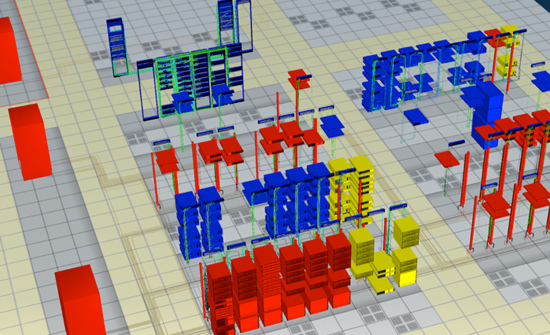

In contrast, a new class of holistic energy and cooling management solutions has emerged that offers fine-grained monitoring. The latest innovations in this area focus on server inlet temperatures, and aggregate them across a row or room to create real-time thermal maps of server assets (see Figure 1).

Figure 1. Thermal patterns in the data center help identify "hot spots," as the first step in optimizing power distribution and cooling system efficiency. Courtesy of Intel Corp. and iTRACS.
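The aggregation of server inlet temperatures across a row can be sketched in a few lines. This is a minimal illustration, not how any particular product works: the input format, the function name `row_thermal_summary`, and the 27 °C hot-spot threshold are all assumptions chosen for the example.

```python
from collections import defaultdict
from statistics import mean


def row_thermal_summary(readings, hot_threshold_c=27.0):
    """Summarize inlet temperatures per row.

    readings: iterable of (row, server_id, inlet_temp_c) tuples.
    hot_threshold_c: assumed threshold above which a server counts
    as a hot spot (illustrative value, not from the article).
    """
    by_row = defaultdict(list)
    for row, server_id, temp_c in readings:
        by_row[row].append((server_id, temp_c))

    summary = {}
    for row, items in by_row.items():
        temps = [temp for _, temp in items]
        summary[row] = {
            "avg_c": mean(temps),
            "max_c": max(temps),
            "hot_spots": sorted(sid for sid, temp in items
                                if temp > hot_threshold_c),
        }
    return summary


# Hypothetical sample readings for two rows.
sample = [("A", "a1", 22.0), ("A", "a2", 30.0), ("B", "b1", 24.0)]
for row, stats in sorted(row_thermal_summary(sample).items()):
    print(row, stats)
```

Logging these summaries at regular intervals would give the raw material for the kind of thermal map shown in Figure 1.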

Similarly, real-time power consumption by servers and storage devices can be monitored and logged, leading to highly optimized rack provisioning and capacity planning within the data center. For example, to provision a rack of ten servers, each with a 650-watt power supply rating, a data center manager might test a fully loaded server and arrive at a requirement of 400 watts per server, or 4 kW per rack of ten servers.

Alternatively, with a real-time monitoring tool, the data center manager can accurately determine the typical maximum power draw in a production environment. Field studies have shown that this approach can help boost rack densities by as much as 60 percent (or up to 16 servers per rack, in this particular example), and can support the accurate capping of power per rack to protect equipment in the unlikely event that demand spikes above the defined power level.
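The provisioning arithmetic in this example can be worked through as a short sketch. The 650-watt rating, the 400-watt load-test figure, and the 4 kW rack budget come from the text. The 250-watt typical maximum is not a measured value: it is back-calculated from the article's 16-servers-per-rack figure (4,000 W / 16) and is used here only for illustration.

```python
def servers_per_rack(rack_budget_watts: float, per_server_watts: float) -> int:
    """Whole servers that fit within a rack's power budget."""
    return int(rack_budget_watts // per_server_watts)


RACK_BUDGET_WATTS = 10 * 400  # 4 kW budget from the load-test example

by_nameplate = servers_per_rack(RACK_BUDGET_WATTS, 650)  # power supply rating
by_load_test = servers_per_rack(RACK_BUDGET_WATTS, 400)  # fully loaded test
by_monitoring = servers_per_rack(RACK_BUDGET_WATTS, 250)  # assumed typical max

density_gain = (by_monitoring - by_load_test) / by_load_test

print(f"nameplate: {by_nameplate}, load test: {by_load_test}, "
      f"monitored: {by_monitoring}, gain: {density_gain:.0%}")
```

Because the monitored figure is a typical maximum rather than an absolute ceiling, this kind of provisioning only makes sense together with a per-rack power cap.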

Perhaps even more important, advanced energy management is helping data center architects intelligently allocate power during emergencies. Equipped with accurate power characteristics, uninterruptible power supplies (UPSs) can be configured to deliver longer operation times to high-priority servers during power outages.
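A rough sketch shows why accurate power data matters for outage planning. The model below is deliberately idealized: it ignores inverter losses and battery discharge behavior, and the battery capacity and server wattages are hypothetical values chosen for the example.

```python
def runtime_hours(usable_battery_wh: float, load_watts: float) -> float:
    """Idealized UPS runtime: usable stored energy divided by load."""
    if load_watts <= 0:
        raise ValueError("load_watts must be positive")
    return usable_battery_wh / load_watts


BATTERY_WH = 2000.0  # hypothetical usable battery capacity

# Hypothetical measured draw per server, tagged by priority.
servers = [("db-1", 250.0, "high"), ("db-2", 250.0, "high"),
           ("batch-1", 250.0, "low"), ("batch-2", 250.0, "low")]

full_load = sum(watts for _, watts, _ in servers)
priority_load = sum(watts for _, watts, prio in servers if prio == "high")

print(f"all servers:        {runtime_hours(BATTERY_WH, full_load):.1f} h")
print(f"high priority only: {runtime_hours(BATTERY_WH, priority_load):.1f} h")
```

In this simplified model, shedding the low-priority half of the load doubles the runtime available to the high-priority servers.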

Thermal and hardware power consumption data can also be logged and used for trending analysis. Temperature data can benefit in-depth airflow studies for improving cooling and airflow, and lead to more energy-efficient designs of integrated facilities systems.

Bottom line: the granularity and accuracy of the emerging energy management solutions make it possible to fine-tune power distribution and cooling. Rather than designing data centers around demand spikes and worst-case scenarios, architects can use the expanded understanding offered by advanced energy management solutions to develop energy-efficient data center designs and policies.

Setting Practical Limits

Armed with power and temperature data for servers, racks, rows, and entire data centers, IT managers can encourage end-user behaviors that promote energy conservation. The same energy management solutions that monitor the data center can introduce controls that enforce green policies. For example, energy management solutions can automatically generate alerts and trigger power adjustments whenever pre-defined power limits have been exceeded.
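The alerting behavior described above amounts to comparing measured power against pre-defined limits. A minimal sketch, assuming measurements and limits are available as simple dictionaries keyed by server or group name (the `PowerAlert` class and `check_limits` function are hypothetical names, not part of any product):

```python
from dataclasses import dataclass


@dataclass
class PowerAlert:
    group: str
    measured_watts: float
    limit_watts: float

    @property
    def excess_watts(self) -> float:
        """How far the measurement is over the limit."""
        return self.measured_watts - self.limit_watts


def check_limits(measurements: dict, limits: dict) -> list:
    """Return an alert for every group whose power exceeds its limit.

    Groups without a configured limit are skipped.
    """
    alerts = []
    for group, watts in measurements.items():
        limit = limits.get(group)
        if limit is not None and watts > limit:
            alerts.append(PowerAlert(group, watts, limit))
    return alerts


# Hypothetical rack-level readings and limits, in watts.
readings = {"rack-1": 4200.0, "rack-2": 3100.0, "rack-3": 2500.0}
limits = {"rack-1": 4000.0, "rack-2": 4000.0}

for alert in check_limits(readings, limits):
    print(f"{alert.group}: {alert.excess_watts:.0f} W over limit")
```

In practice each alert would feed a notification system or trigger a power adjustment rather than a print statement.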

Simply capping the power consumption of individual servers or groups of servers is not enough, however, because server performance and level of service are directly tied to power levels. Therefore, advanced energy management solutions dynamically balance power and performance by adjusting the processor operating frequencies. This requires a tightly integrated solution that can interact with the server operating system or hypervisor, based on defined thresholds.
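The idea of balancing power and performance by adjusting processor frequency against thresholds can be illustrated with a toy control step. This is a sketch under stated assumptions, not a description of how any real solution talks to the operating system or hypervisor: the frequency steps, the headroom value, and the function name `next_frequency` are all hypothetical.

```python
# Hypothetical processor frequency steps, lowest to highest (MHz).
FREQ_STEPS_MHZ = [1200, 1600, 2000, 2400, 2800]


def next_frequency(current_mhz: int, measured_watts: float,
                   cap_watts: float, headroom: float = 0.10) -> int:
    """Choose the next frequency step for one control interval.

    - Above the cap: step down one level (if possible).
    - Below the cap by more than `headroom`: step up one level to
      recover performance (if possible).
    - Otherwise: hold, which avoids oscillating around the cap.
    """
    i = FREQ_STEPS_MHZ.index(current_mhz)
    if measured_watts > cap_watts and i > 0:
        return FREQ_STEPS_MHZ[i - 1]
    if measured_watts < cap_watts * (1 - headroom) and i < len(FREQ_STEPS_MHZ) - 1:
        return FREQ_STEPS_MHZ[i + 1]
    return current_mhz


# Walk through a few hypothetical readings against a 400 W cap.
freq = 2400
for watts in [420.0, 380.0, 300.0]:
    freq = next_frequency(freq, watts, cap_watts=400.0)
    print(f"measured {watts:.0f} W -> run at {freq} MHz")
```

The hold band between the cap and the headroom threshold is a design choice that keeps the controller from flipping between two steps on every interval.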

Field tests of state-of-the-art energy management solutions have shown that this intelligent approach can lower server power consumption by as much as 20 percent without impacting performance.

Other Benefits

Besides helping to fine-tune energy-efficient data center designs, the ability to identify "hot spots" minimizes the risks of power overloads and related server failures. Proactive energy management solutions provide insights into the power patterns leading up to problematic events, and offer remedial controls that avoid wasted power, equipment failures, and service disruptions.

Companies can also use the new energy management solutions for power-based metering and energy cost charge-backs. The ability to attach energy costs to data center services raises awareness about resource utilization, and provides further motivation for embracing environmentally, and economically, sustainable business practices.
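One simple way to attach energy costs to services is to split the energy bill in proportion to metered consumption. The sketch below assumes per-service kWh totals are already available from monitoring; the service names, the figures, and the function name `energy_chargeback` are hypothetical.

```python
def energy_chargeback(usage_kwh_by_service: dict, total_bill: float) -> dict:
    """Split total_bill across services in proportion to metered kWh."""
    total_kwh = sum(usage_kwh_by_service.values())
    if total_kwh <= 0:
        raise ValueError("total metered energy must be positive")
    return {service: total_bill * kwh / total_kwh
            for service, kwh in usage_kwh_by_service.items()}


# Hypothetical monthly metered usage per service, in kWh.
usage = {"web": 300.0, "database": 100.0}

for service, cost in energy_chargeback(usage, total_bill=200.0).items():
    print(f"{service}: ${cost:.2f}")
```

A proportional split is only one possible policy; shared overhead such as cooling could also be allocated separately.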

Based on any one of the previously mentioned benefits, the case for energy management is compelling. And, as explained at the top of this article, there is incredible upside. The energy being wasted on idle data center servers alone yields an attractive ROI for energy management solution deployments, with businesses currently able to achieve 20- to 40-percent reductions of waste in this area.

Copyright 2011 Energy and Technical Services Ltd. All Rights Reserved. Energyts.com